When articles don't include the code and data required to figure out if the work is legit, they can't be trusted--or even peer reviewed.

Reminiscent of COVID: https://x.com/wideawake_media/status/1787472500776943990

_____

Crémieux @cremieuxrecueil 9h

In short, it's hard to tell what scientific work is 'legit' because so little of it includes the code and data required to figure that out.

Increasing code and data availability might be an important part of distancing ourselves from the replication crisis.

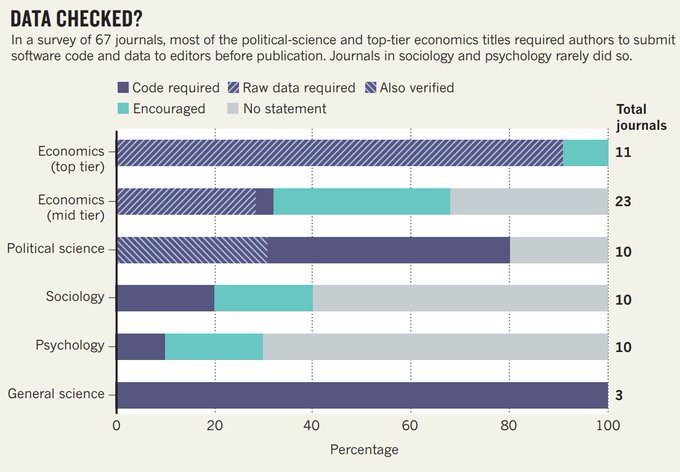

Another publication looked at 67 journals and recorded:

Across economics, political science, sociology, psychology, and general science journals, code and data requirements are rare, and checking results was common only in political science.

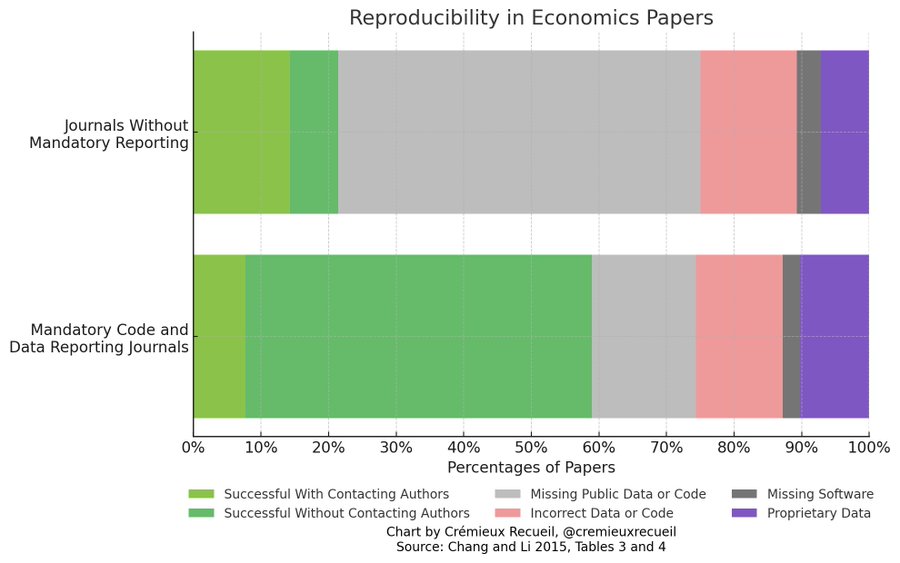

Some economists tried to reproduce the results of 67 economics papers and they pretty much couldn't do it:

Even with help from authors, only half of the papers ended up being reproducible, and this was still a problem at journals that required reporting of code and data.

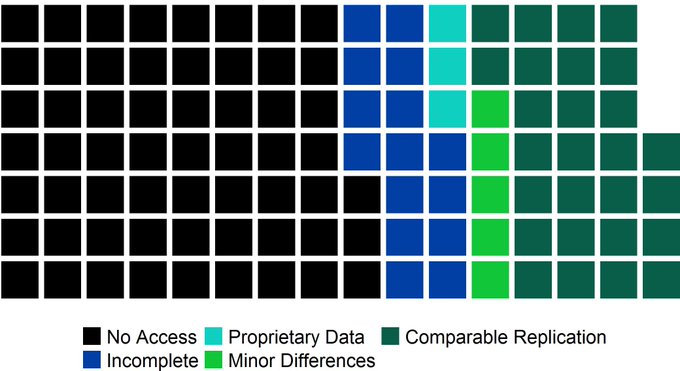

An earlier paper looked at the evidence for reproducibility in international development impact evaluations.

Similar story there: too much data was simply unavailable and some was discrepant.
